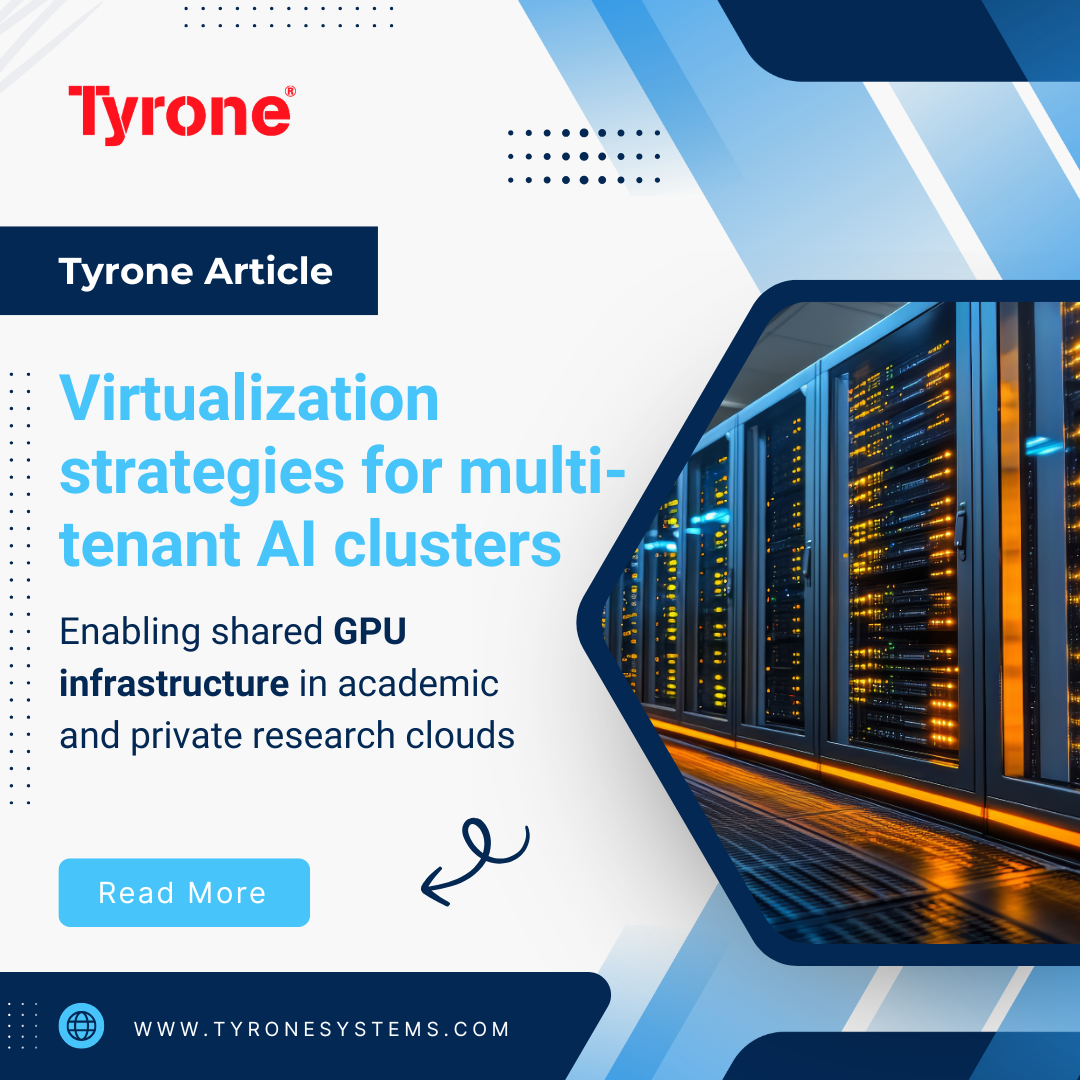

Introduction

The explosive growth of artificial intelligence in academic and private research has created an insatiable demand for GPU-driven compute. To balance cost with performance, many organizations are turning to shared, multi-tenant clusters. The idea is simple: allow multiple groups to access the same pool of GPU resources. The execution, however, is complex. GPUs do not behave like CPUs: fragmentation, topology dependencies, and isolation concerns make virtualization a serious challenge.

This article explores advanced strategies that make shared GPU clusters viable, with an emphasis on performance, reliability, and governance. The discussion is designed for stakeholders who need to assess infrastructure investments and operational trade-offs in academic and private research contexts.

The Core Challenges of GPU Sharing

Fragmentation and scheduling inefficiency

Unlike CPUs, GPUs are not naturally divisible into small, independent units. Most deep learning workloads require multiple GPUs allocated simultaneously, creating scheduling bottlenecks. In fact, a large study of multi-tenant clusters showed that 47.7% of GPU cycles were wasted, with mid-sized jobs (16 GPUs) using resources least efficiently, at just 40.4% utilization (Source: arXiv).
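To make the fragmentation problem concrete, here is a minimal sketch of all-or-nothing ("gang") placement. It assumes, purely for illustration, that a job must fit entirely on one node; the function names and the cluster snapshot are hypothetical and are not taken from the cited study.

```python
from typing import List, Optional


def place_gang_job(free_per_node: List[int], gpus_needed: int) -> Optional[int]:
    """Return the index of the first node that can host the whole job, else None."""
    for node, free in enumerate(free_per_node):
        if free >= gpus_needed:
            return node
    return None


def stranded_gpus(free_per_node: List[int], gpus_needed: int) -> int:
    """Count free GPUs that sit on nodes too small to host the job."""
    return sum(free for free in free_per_node if free < gpus_needed)


# Hypothetical snapshot: free GPUs on each of four nodes.
free = [3, 2, 3, 0]
print(place_gang_job(free, 4))  # None: no single node has 4 free GPUs
print(stranded_gpus(free, 4))   # 8: idle GPUs the 4-GPU job cannot use
```

In this snapshot eight GPUs are idle, yet a four-GPU job still waits. That is the kind of waste the utilization figures above describe.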

Performance sensitivity

AI workloads rely heavily on high-bandwidth interconnects between accelerators. If virtualization layers fail to preserve the efficiency of these connections, training times can be severely impacted. Topology mismatches or naive allocation policies can quickly erode the benefits of shared clusters.

Reliability risks

Multi-tenant clusters amplify the impact of hardware and job failures. A long-term study covering 150 million GPU hours revealed that larger jobs were disproportionately more failure-prone, while small jobs dominated cluster volume (Source: arXiv). This makes failure isolation an essential part of virtualization strategy.
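One simple way to see why larger jobs fail more often is a back-of-the-envelope model. The sketch below assumes each GPU fails independently with the same probability during a run, and that any single failure kills the job. Both are simplifying assumptions for illustration, not findings of the cited study, and the function name is hypothetical.

```python
def job_failure_probability(per_gpu_failure: float, gpus: int) -> float:
    """Probability that at least one of `gpus` devices fails during the run.

    Assumes independent, identically distributed per-GPU failures.
    """
    if not 0.0 <= per_gpu_failure <= 1.0:
        raise ValueError("per_gpu_failure must be a probability")
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return 1.0 - (1.0 - per_gpu_failure) ** gpus


# Hypothetical 1% per-GPU failure chance over the lifetime of a job.
for size in (1, 8, 64):
    print(size, round(job_failure_probability(0.01, size), 3))
```

Under these assumptions the failure probability grows quickly with job size, which is why confining a failure to the affected job matters more as jobs get larger.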

Security and isolation

GPU containers alone are insufficient for strong tenant isolation. Known vulnerabilities have shown that attackers can escape container boundaries and escalate into the host environment. Stronger isolation models, such as hardware partitioning combined with virtualized boundaries, are needed to ensure safe multi-tenant operations.

Hardware Partitioning for Strong Isolation

One of the most effective approaches to GPU sharing is hardware partitioning. This strategy allows a single physical GPU to be split into multiple isolated instances, each behaving like a smaller, dedicated accelerator.

Dynamic partitioning frameworks can tailor instance sizes to match workload profiles. This approach has been reported to reduce job completion times by nearly half compared with monolithic scheduling, in which each job receives a whole GPU. Optimized placement strategies that balance partition size with job demand have also been shown to improve acceptance rates while reducing the number of active GPUs needed.
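The placement idea can be sketched as a bin-packing problem. The example below uses a best-fit-decreasing heuristic and assumes each GPU exposes seven equal slices. The slice count, the function name, and the job names are illustrative assumptions, and real partitioning hardware typically imposes extra placement constraints that this sketch ignores.

```python
from typing import Dict, List

SLICES_PER_GPU = 7  # assumption for illustration only


def pack_best_fit(requests: Dict[str, int],
                  slices_per_gpu: int = SLICES_PER_GPU) -> List[Dict[str, int]]:
    """Assign each job's slice request to a GPU using best-fit decreasing.

    Returns one dict per active GPU, mapping job name to slices used.
    """
    gpus: List[Dict[str, int]] = []
    free: List[int] = []  # free slices on each active GPU
    # Place the largest requests first; they are the hardest to fit.
    for job, size in sorted(requests.items(), key=lambda kv: -kv[1]):
        if size < 1 or size > slices_per_gpu:
            raise ValueError(f"{job}: request of {size} slices cannot be placed")
        candidates = [i for i, f in enumerate(free) if f >= size]
        if candidates:
            # Best fit: the GPU left with the least spare room afterwards.
            target = min(candidates, key=lambda i: free[i])
        else:
            gpus.append({})
            free.append(slices_per_gpu)
            target = len(gpus) - 1
        gpus[target][job] = size
        free[target] -= size
    return gpus


# Hypothetical slice requests from five jobs.
layout = pack_best_fit({"a": 4, "b": 3, "c": 2, "d": 2, "e": 3})
print(len(layout))  # 2 active GPUs for 14 requested slices
```

Giving each of these five jobs a whole GPU would keep five devices busy. Packing by slice size needs two, which is the "fewer active GPUs" effect described above.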

For research institutions where multiple teams compete for limited resources, hardware partitioning delivers both efficiency and predictability.

Time Multiplexing and Remote Execution

For workloads that are intermittent or light on GPU usage, time-based sharing models are valuable. In this approach, multiple tenants use the same GPU in alternating time slots, or forward GPU calls to a remote server that hosts the accelerator.

While performance varies depending on workload type, ranging from near-native throughput to slower speeds, this method is particularly effective for inference tasks or lightweight experimentation. Remote execution frameworks also allow clusters without dedicated GPUs in every node to access accelerators across the network, reducing redundant hardware investment.

Although not suitable for large-scale training, these techniques complement hardware partitioning by ensuring idle GPU capacity can be efficiently reused.
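The alternating time slots described above can be sketched as a round-robin simulation. This is a toy model under stated assumptions: the fixed quantum, the tenant names, and the workloads are hypothetical, and the cost of switching between tenants is ignored.

```python
from collections import deque
from typing import Dict, List, Tuple


def round_robin(work_ms: Dict[str, int], quantum_ms: int) -> List[Tuple[str, int]]:
    """Simulate tenants sharing one GPU in fixed time slots.

    Returns the timeline as (tenant, milliseconds run) pairs.
    """
    if quantum_ms <= 0:
        raise ValueError("quantum_ms must be positive")
    queue = deque((tenant, work) for tenant, work in work_ms.items() if work > 0)
    timeline: List[Tuple[str, int]] = []
    while queue:
        tenant, remaining = queue.popleft()
        run = min(quantum_ms, remaining)
        timeline.append((tenant, run))
        if remaining > run:
            # Unfinished work goes to the back of the line.
            queue.append((tenant, remaining - run))
    return timeline


print(round_robin({"a": 25, "b": 10}, quantum_ms=10))
# [('a', 10), ('b', 10), ('a', 10), ('a', 5)]
```

The short job finishes after its first slot instead of waiting behind the longer one, which is why this model suits bursty inference and light experimentation.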

Topology-Aware Resource Allocation

GPU clusters are often built with complex interconnects: PCIe hierarchies, NUMA domains, and high-bandwidth links between accelerators. Ignoring these topologies during scheduling can cause bottlenecks that significantly degrade performance.

Topology-aware allocation methods address this by analyzing communication patterns in distributed jobs and mapping them onto physical layouts that minimize latency.
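As a rough sketch of the mapping step, the example below picks the group of free GPUs with the lowest total pairwise communication cost. The (node, switch) location model, the cost values, and the function names are hypothetical. The exhaustive search is only practical for small pools, so a production scheduler would need a heuristic.

```python
from itertools import combinations
from typing import Dict, Optional, Tuple

Location = Tuple[int, int]  # (node id, switch id within the node); assumed model


def pair_cost(a: Location, b: Location) -> int:
    """Illustrative link costs: same switch < same node < across nodes."""
    if a[0] != b[0]:
        return 10  # traffic crosses the network between nodes
    if a[1] != b[1]:
        return 2   # same node, different switch
    return 1       # same switch


def pick_gpus(free: Dict[str, Location], k: int) -> Optional[Tuple[str, ...]]:
    """Choose k free GPUs that minimize the sum of pairwise link costs."""
    if k < 0 or k > len(free):
        return None
    return min(
        combinations(sorted(free), k),
        key=lambda group: sum(
            pair_cost(free[x], free[y]) for x, y in combinations(group, 2)
        ),
    )


free_gpus = {"g0": (0, 0), "g1": (0, 1), "g2": (1, 0), "g3": (1, 0), "g4": (0, 0)}
print(pick_gpus(free_gpus, 2))  # ('g0', 'g4'): both sit behind the same switch
```

A naive first-available policy would hand out g0 and g1, which sit on different switches. The topology-aware choice keeps the pair on a single switch.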

By combining topology-aware scheduling with partitioning, clusters can achieve both efficient utilization and high performance, ensuring that multi-tenant sharing does not come at the expense of speed.

Cooperative Abstractions Between Tenants and Providers

A recurring challenge in shared infrastructure is the lack of transparency: providers manage resources without insight into workload characteristics, while tenants lack visibility into hardware constraints. This disconnect leads to inefficiency.

Cooperative abstractions aim to bridge this gap. Tenants can provide limited information about workload priorities or fault tolerance requirements, while providers expose simplified details about cluster topology or resource limits. By aligning expectations on both sides, scheduling and allocation can be optimized in ways that improve fairness and utilization.
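One way to picture this exchange is as two small data structures and a decision rule. The sketch below is an illustrative assumption, not a description of any particular system: the field names, the notion of "reclaimable" capacity, and the outcome labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TenantHint:
    """What a tenant chooses to disclose about a job."""
    job: str
    gpus: int
    priority: int         # higher means more urgent
    checkpointable: bool  # job can tolerate being preempted and resumed


@dataclass(frozen=True)
class ProviderView:
    """Simplified cluster state the provider chooses to expose."""
    free_gpus: int
    reclaimable_gpus: int  # capacity that may be taken back at short notice
    max_gpus_per_job: int


def decide(hint: TenantHint, view: ProviderView) -> str:
    """Pick an outcome using only the information both sides shared."""
    if hint.gpus > view.max_gpus_per_job:
        return "reject"
    if hint.gpus <= view.free_gpus:
        return "run"
    # Only fault-tolerant jobs may spill onto capacity that can be reclaimed.
    if hint.checkpointable and hint.gpus <= view.free_gpus + view.reclaimable_gpus:
        return "run_preemptible"
    return "queue"


def order_queue(hints: List[TenantHint]) -> List[TenantHint]:
    """Serve waiting jobs by declared priority, highest first."""
    return sorted(hints, key=lambda h: -h.priority)


view = ProviderView(free_gpus=2, reclaimable_gpus=4, max_gpus_per_job=8)
print(decide(TenantHint("train", 4, priority=1, checkpointable=True), view))
# run_preemptible
```

Neither side reveals much here, but a single flag about fault tolerance is enough to let the job start on capacity that would otherwise sit idle.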

For research clouds that serve diverse departments or external partners, this cooperative model ensures resources are allocated in a manner that balances efficiency with trust.

A Strategic Path Forward

For stakeholders, enabling shared GPU infrastructure is best approached in phases:

- Begin with hardware partitioning to ensure isolation and predictable performance.

- Add intelligent placement algorithms that take communication topology into account.

- Introduce time multiplexing and remote execution selectively for lighter workloads.

- Move toward cooperative abstractions that allow tenants and providers to collaborate on optimization.

This staged adoption ensures incremental gains without destabilizing existing operations, while steadily improving utilization and reducing cost.

Benefits for Stakeholders

Adopting these virtualization strategies brings tangible advantages:

- Capital efficiency by reducing the number of dedicated GPUs required per tenant.

- Performance stability through partitioning and topology-aware scheduling.

- Governance and fairness with stronger isolation and policy-based controls.

- Operational flexibility through dynamic allocation, failure isolation, and support for a wide range of workload types.

Conclusion

The era of siloed GPU ownership is giving way to a model of shared, virtualized infrastructure. With hardware partitioning, topology-aware allocation, cooperative abstractions, and selective time multiplexing, academic and private research clouds can deliver high-performance, multi-tenant GPU clusters that are both cost-efficient and secure.

For stakeholders, the choice is not whether to adopt these strategies, but how quickly they can integrate them to meet rising AI demands. The payoff is clear: greater utilization, reduced capital expenditure, and a sustainable path for scaling research infrastructure.